Rohit Rangnekar's Email & Phone Number

Global IoT Leader | Principal Architect & Sr. Manager @ AWS

Rohit Rangnekar Email Addresses

Rohit Rangnekar Phone Numbers

Rohit Rangnekar's Work Experience

SportFin

Advisor

March 2022 to Present

Sr. Architect (Machine Learning, IoT and Analytics)

January 2016 to September 2020

ViaSat

Technical Lead & Senior Big Data Engineer

April 2012 to January 2016

ViaSat

Software Engineer

January 2009 to January 2011

ViaSat

Software Engineering Intern

May 2008 to August 2008

Frequently Asked Questions about Rohit Rangnekar

What company does Rohit Rangnekar work for?

Rohit Rangnekar works for Amazon Web Services (AWS).

What is Rohit Rangnekar's role at Amazon Web Services (AWS)?

Rohit Rangnekar is Sr. Manager, Consumer IoT and Connectivity.

What is Rohit Rangnekar's personal email address?

Rohit Rangnekar's personal email address is r****[email protected]

What is Rohit Rangnekar's business email address?

Rohit Rangnekar's business email address is r****[email protected]

What is Rohit Rangnekar's phone number?

Rohit Rangnekar's phone number is (**) *** *** 455.

What industry does Rohit Rangnekar work in?

Rohit Rangnekar works in the Information Technology & Services industry.

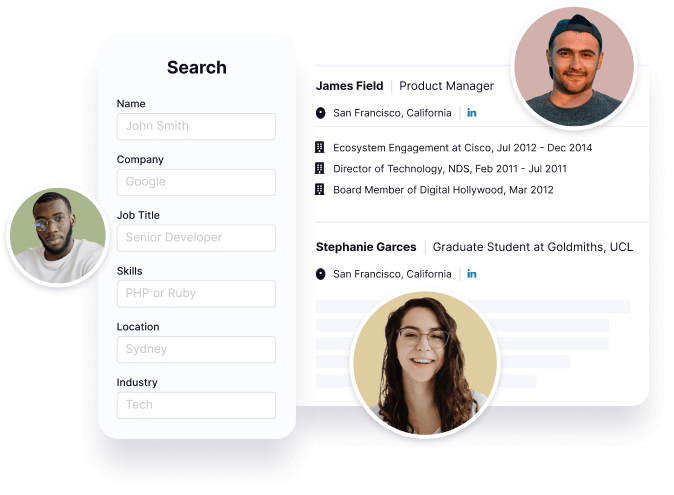

Find emails and phone numbers for 300M professionals.

In a nutshell

Rohit Rangnekar's Personality Type

Introversion (I), Sensing (S), Thinking (T), Perceiving (P)

Average Tenure

2 years, 0 months

Rohit Rangnekar's Willingness to Change Jobs

Open to opportunity?

There's an 87% chance that Rohit Rangnekar is seeking new opportunities.

Rohit Rangnekar's Social Media Links

/in/rohitrangnekar /company/amazon-web-services /school/virginia-tech/