Stuart Burley's Email & Phone Number

Chief Development Geoscientist at Murphy Oil Corporation

Stuart Burley Email Addresses

Stuart Burley's Work Experience

Basin Dynamics Research Group, Department of Earth Sciences, The University of Keele

Honorary Professor of Petroleum Geology

July 1998 to Present

Discovery Geoscience

Director

March 2020 to Present

Orient Petroleum Pty Limited

Senior Vice President Exploration

January 2017 to March 2020

Cairn Energy India

Head of Geosciences

May 2008 to April 2014

University of Manchester

Senior Lecturer

July 1988 to February 1995

University of Sheffield

Lecturer

June 1986 to July 1988

Universität Bern

Research Fellow

May 1982 to June 1986

Robertson Research

Sedimentologist

August 1977 to September 1979

Stuart Burley's Education

University of Hull

January 1974 to January 1977

University of Hull

January 1979 to January 1982

Frequently Asked Questions about Stuart Burley

What is Stuart Burley's email address?

Email Stuart Burley at [email protected] or [email protected]. These are the most recently updated email addresses found for Stuart Burley in 2024.

How can I contact Stuart Burley?

To contact Stuart Burley, send an email to [email protected] or [email protected].

What company does Stuart Burley work for?

Stuart Burley works for Murphy Oil Corporation.

What is Stuart Burley's role at Murphy Oil Corporation?

Stuart Burley is Chief Development Geoscientist at Murphy Oil Corporation.

What is Stuart Burley's Phone Number?

Stuart Burley's phone number is +44 ** **** *275.

What industry does Stuart Burley work in?

Stuart Burley works in the Oil & Energy industry.

Stuart Burley Email Addresses

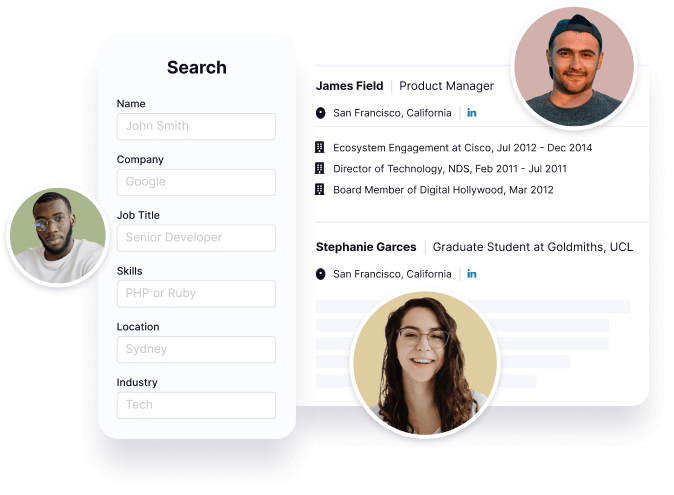

Find emails and phone numbers for 300M professionals.

Search by name, job titles, seniority, skills, location, company name, industry, company size, revenue, and other 20+ data points to reach the right people you need. Get triple-verified contact details in one-click.In a nutshell

Stuart Burley's Personality Type

Extraversion (E), Intuition (N), Feeling (F), Judging (J)

Average Tenure

2 years, 0 months

Stuart Burley's Willingness to Change Jobs

Open to opportunity?

There is a 93% chance that Stuart Burley is seeking new opportunities.

Stuart Burley's Social Media Links

/in/stuartburley