NEERAJ JOHRI, PMP's Email & Phone Number

Vice President at Texmaco Rail & Engineering Limited, RAIL EPC DIVISION

NEERAJ JOHRI, PMP Email Addresses

NEERAJ JOHRI, PMP Phone Numbers

NEERAJ JOHRI, PMP's Work Experience

Texmaco Rail & Engineering Limited

Vice President

October 2018 to Present

Kalindee Rail Nirman, a division of Texmaco Rail & Engineering Ltd

HEAD PROGRAMME EXPERT

April 2015 to September 2018

Ansaldo STS

Senior Supply Chain Planner KfW/KMRC

January 2014 to December 2014

Ansaldo STS Transportation Systems India Private Limited

Manager Supply Chain Planning Signalling India

November 2011 to December 2013

Ansaldo STS Transportation Systems India Pvt. Limited

Project Manager

April 2007 to November 2011

Modipon Limited

Graduate Engineer Trainee

June 1986 to February 1987


NEERAJ JOHRI, PMP's Education

University of Roorkee, the oldest premier institution of India

January 1982 to January 1986

Indian Institute of Technology, Roorkee

January 1982 to January 1986

IMT CDL

January 2011 to January 2012


Frequently Asked Questions about NEERAJ JOHRI, PMP

What is Neeraj Johri's email address?

Email Neeraj Johri at [email protected]. This is the most recent email address for Neeraj Johri found in 2024.

How can I contact Neeraj Johri?

To contact Neeraj Johri, send an email to [email protected].

What company does NEERAJ JOHRI, PMP work for?

NEERAJ JOHRI, PMP works for Texmaco Rail & Engineering Limited.

What is NEERAJ JOHRI, PMP's role at Texmaco Rail & Engineering Limited?

NEERAJ JOHRI, PMP is Vice President.

What is NEERAJ JOHRI, PMP's Phone Number?

NEERAJ JOHRI, PMP's phone number is (**) *** *** 188.

What industry does NEERAJ JOHRI, PMP work in?

NEERAJ JOHRI, PMP works in the Electrical & Electronic Manufacturing industry.

NEERAJ JOHRI, PMP Email Addresses

NEERAJ JOHRI, PMP Phone Numbers

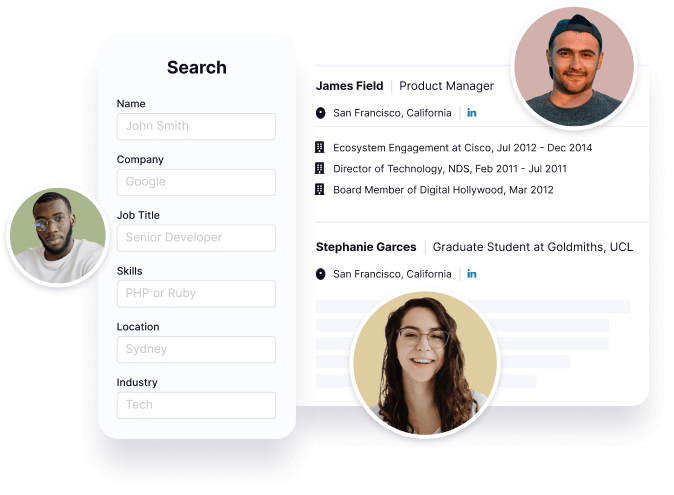

In a nutshell

NEERAJ JOHRI, PMP's Personality Type

Extraversion (E), Intuition (N), Feeling (F), Judging (J)

Average Tenure

2 years, 0 months

NEERAJ JOHRI, PMP's Willingness to Change Jobs


Open to opportunity?

There's an 86% chance that NEERAJ JOHRI, PMP is seeking new opportunities.

NEERAJ JOHRI, PMP's Social Media Links

/in/neeraj-johri-pmp-31578117